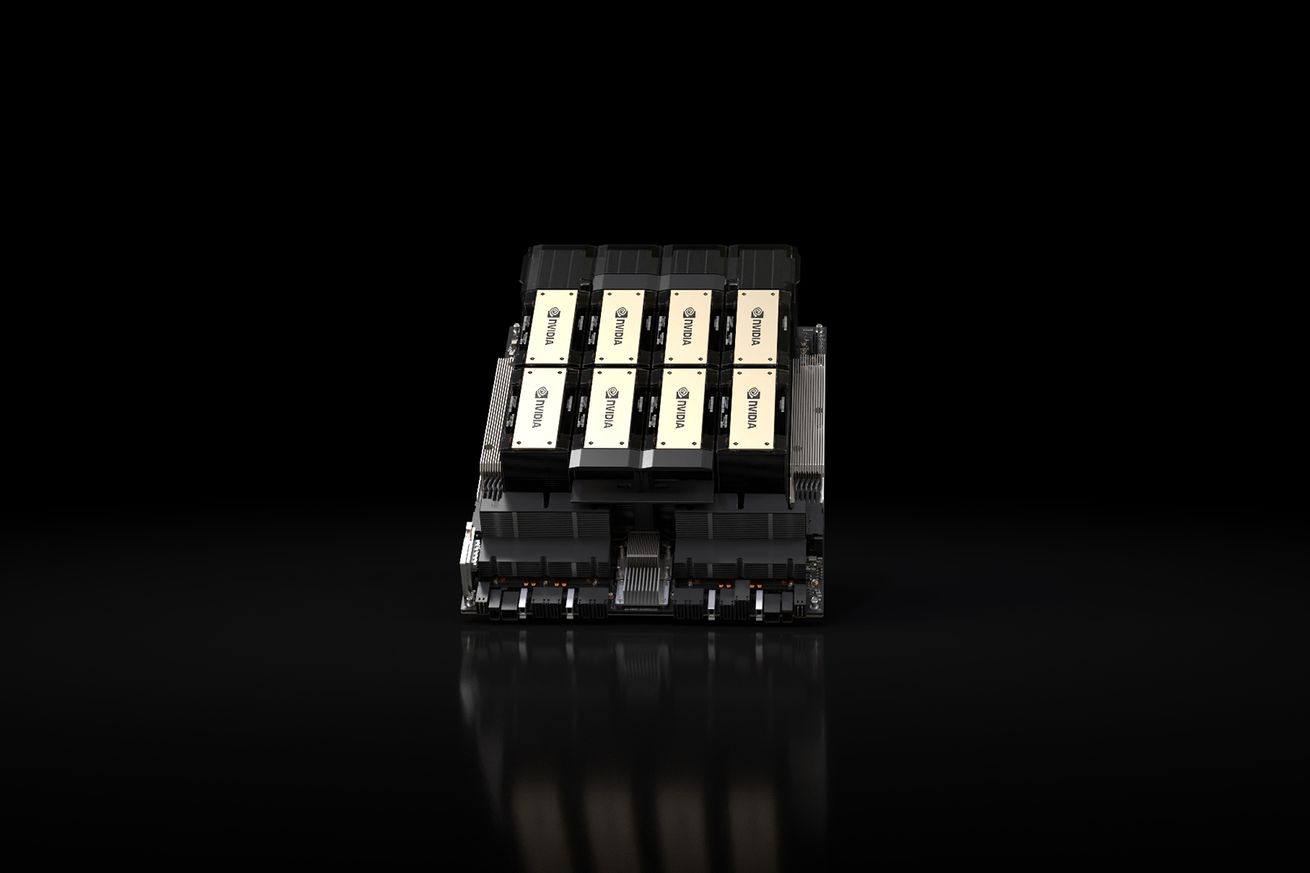

Nvidia’s New AI Chip: The HGX H200

Nvidia is preparing to release the HGX H200, a new AI chip built for demanding generative AI workloads. The GPU offers more memory capacity and bandwidth than its predecessor, the H100, which should speed up AI models and high-performance computing applications. First shipments are scheduled for the second quarter of 2024.

Enhanced Memory Capabilities

The H200 uses a newer memory specification, HBM3e, which raises its memory bandwidth to 4.8 terabytes per second and its total memory capacity to 141GB. Because large AI models are often limited by how quickly data can be moved in and out of memory, these gains are expected to make AI processing faster and more efficient.
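To put the two headline figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the bandwidth and capacity quoted above. The function names and the choice of 2 bytes per parameter (16-bit weights) are illustrative assumptions, not Nvidia specifications, and the results are theoretical bounds rather than measured performance.

```python
# Figures quoted in the article.
H200_BANDWIDTH_TB_S = 4.8  # memory bandwidth, terabytes per second
H200_CAPACITY_GB = 141     # total memory capacity, gigabytes


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tb_s: float) -> float:
    """Lower bound, in milliseconds, on the time to read all of memory once."""
    bandwidth_gb_s = bandwidth_tb_s * 1000  # decimal units: 1 TB = 1000 GB
    return capacity_gb / bandwidth_gb_s * 1000


def max_params_billions(capacity_gb: float, bytes_per_param: int) -> float:
    """Upper bound on model size (billions of parameters) that fits in memory.

    Ignores activations, caches, and any other memory use (an assumption).
    """
    return capacity_gb / bytes_per_param


if __name__ == "__main__":
    sweep = full_memory_sweep_ms(H200_CAPACITY_GB, H200_BANDWIDTH_TB_S)
    # Assumption: 2 bytes per parameter, i.e. 16-bit weights.
    params = max_params_billions(H200_CAPACITY_GB, bytes_per_param=2)
    print(f"Time to read all memory once: at least {sweep:.1f} ms")
    print(f"Largest 16-bit model that fits: at most {params:.1f}B parameters")
```

Under these assumptions, reading the full 141GB once takes at least roughly 29 milliseconds, and weights alone cap a 16-bit model at about 70 billion parameters on a single chip. Real workloads will land below these bounds.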

Compatibility and Pricing

The H200 is designed to be compatible with systems that already support the H100, so cloud providers should be able to adopt it without reworking their infrastructure. Major providers including Amazon, Google, Microsoft, and Oracle are expected to offer H200 GPUs. Nvidia has not disclosed pricing, but the chips are expected to be expensive.

High Demand for AI Chips

Nvidia’s announcement comes at a time when demand for AI chips like the H100 is soaring. H100s are scarce enough that companies have collaborated to secure access to them, and some have even used the chips as collateral for loans. H100 production is reportedly set to triple in 2024, and demand for the new H200 is expected to be substantial as well.

Future Prospects

Hardware advances like the HGX H200 continue to push AI technology forward, with the potential to reshape industries. With more memory and higher bandwidth, Nvidia’s new chip raises the ceiling on AI processing and opens the door to new applications.